Understanding the Modern Landscape: MLOps and DevOps in 2024

By 2024, integrating machine learning (ML) into major projects has become even more widespread. Developers are no longer just building ML models; they must also ensure those models are deployed effectively into production environments. This shift highlights the growing importance of MLOps (machine learning operations), which merges the principles of DevOps with the specialised requirements of ML model development and deployment.

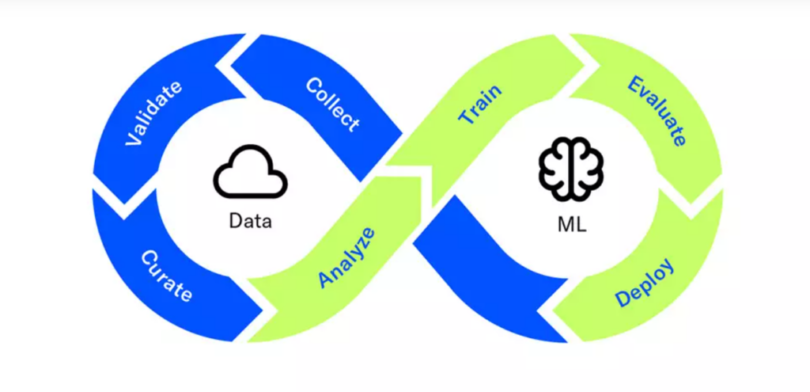

DevOps aims to improve the software development lifecycle by enhancing collaboration, automation, and efficiency between development and operations teams. MLOps builds on these principles but addresses challenges unique to ML workflows, including managing data pipelines, training models, and continuously monitoring model performance in production.

To remain competitive and advance in their careers, professionals should consider enrolling in a comprehensive MLOps program. Courses such as the MLOps Course aim to equip individuals with the skills needed to manage modern ML operations, teaching participants to handle the complexities of ML workflows and preparing them for high-demand roles in this rapidly evolving field.

Let’s explore the intricacies of DevOps and MLOps, their characteristics, and how they intersect in modern project management.

What’s the ongoing buzz about MLOps?

Much of the buzz comes from the growing ecosystem of tools and platforms that support MLOps. Let’s look at some of the most widely used ones:

- TensorFlow Extended (TFX):

TensorFlow Extended (TFX) is an end-to-end platform for building and deploying production-ready ML pipelines. It supports the development, training, validation, deployment, and maintenance of ML models. TFX is built around TensorFlow and provides a scalable way to manage the ML lifecycle in real-world environments. Key features include built-in components for data validation, model analysis, and model serving, making it suitable for large-scale ML deployments.
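Data validation, one of the TFX capabilities listed above, checks each incoming batch against an expected schema before it reaches training. The sketch below illustrates the idea in plain Python under stated assumptions: the schema format and the `validate_batch` function are hypothetical and are not the TFX or TensorFlow Data Validation API.

```python
from typing import Any

# Hypothetical schema: expected Python type and allowed range per column (None = unbounded).
SCHEMA: dict[str, dict[str, Any]] = {
    "age": {"type": int, "min": 0, "max": 120},
    "income": {"type": float, "min": 0.0, "max": None},
}


def validate_batch(rows: list[dict[str, Any]], schema: dict[str, dict[str, Any]]) -> list[str]:
    """Return human-readable anomalies; an empty list means the batch passed."""
    anomalies: list[str] = []
    for i, row in enumerate(rows):
        for column, spec in schema.items():
            if column not in row:
                anomalies.append(f"row {i}: missing column '{column}'")
                continue
            value = row[column]
            if not isinstance(value, spec["type"]):
                anomalies.append(f"row {i}: '{column}' has unexpected type {type(value).__name__}")
                continue
            if spec["min"] is not None and value < spec["min"]:
                anomalies.append(f"row {i}: '{column}'={value} is below {spec['min']}")
            if spec["max"] is not None and value > spec["max"]:
                anomalies.append(f"row {i}: '{column}'={value} is above {spec['max']}")
    return anomalies


batch = [
    {"age": 34, "income": 52000.0},
    {"age": -1, "income": 41000.0},  # out-of-range age
    {"age": 29},                     # missing income
]
for problem in validate_batch(batch, SCHEMA):
    print(problem)
```

In a pipeline, a non-empty result would typically halt the run before any model is trained on bad data.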

- Kubeflow:

Kubeflow is a machine learning toolkit built on Kubernetes, designed to simplify the deployment and management of ML workflows in containerised environments. It provides tools and APIs for building, training, and deploying ML models at scale. Kubeflow’s architecture allows users to run experiments, automate model training, and deploy models as microservices, leveraging the scalability and portability of Kubernetes clusters. With support for distributed training and hyperparameter tuning, Kubeflow enables efficient ML workflow orchestration in production environments.
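Hyperparameter tuning, which Kubeflow can run as many parallel trials across a cluster, comes down to evaluating a set of configurations and keeping the best one. The sketch below runs an exhaustive grid search sequentially in plain Python; `train_and_score` is a hypothetical stand-in for a real training job, and nothing here uses Kubeflow's API.

```python
import itertools


def train_and_score(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for a training job; returns a validation loss (lower is better).

    This toy loss is minimised at learning_rate=0.01 and batch_size=64.
    """
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [32, 64, 128],
}

# Each combination is an independent trial, which is what allows a cluster to run them in parallel.
trials = []
for values in itertools.product(*search_space.values()):
    params = dict(zip(search_space.keys(), values))
    trials.append((train_and_score(**params), params))

best_loss, best_params = min(trials, key=lambda trial: trial[0])
print(best_params, best_loss)
```

Grid search is the simplest strategy; random or Bayesian search explores large spaces with fewer trials, but the trial-and-compare structure stays the same.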

- Apache Airflow:

Apache Airflow is an open-source platform for orchestrating complex workflows and data pipelines. It enables users to define, schedule, and monitor tasks as directed acyclic graphs (DAGs), making it ideal for ML pipeline automation. Airflow’s extensible architecture allows integration with various ML frameworks and tools, facilitating the creation of end-to-end ML pipelines. Apache Airflow streamlines the development and management of ML workflows in heterogeneous environments by providing rich visualisation, monitoring, and scheduling capabilities.
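The core idea behind Airflow's DAGs is that each task runs only after all of its upstream dependencies have finished. The sketch below reproduces that ordering with Python's standard-library `graphlib` module (Python 3.9+) rather than Airflow itself; the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of upstream tasks it depends on.
pipeline = {
    "extract_data": set(),
    "validate_data": {"extract_data"},
    "compute_statistics": {"extract_data"},
    "train_model": {"validate_data", "compute_statistics"},
    "evaluate_model": {"train_model"},
    "deploy_model": {"evaluate_model"},
}

# static_order() yields every task after all of its dependencies and
# raises graphlib.CycleError if the graph contains a cycle.
order = list(TopologicalSorter(pipeline).static_order())
print(" -> ".join(order))
```

Because `validate_data` and `compute_statistics` do not depend on each other, a scheduler such as Airflow is free to run them in parallel.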

- MLflow:

MLflow is an open-source platform for managing the ML lifecycle, covering experiment tracking, model packaging, and deployment. It offers a unified interface for tracking experiments, logging metrics, and organising model versions, making it easier to reproduce and compare results. MLflow supports multiple ML frameworks, so users can train and deploy models with their preferred libraries. With a built-in model registry and deployment tools, MLflow simplifies moving models from experimentation to production, fostering collaboration and reproducibility in ML projects.
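At its core, experiment tracking records the parameters and resulting metrics of each run so that runs can be compared later. The minimal in-memory `Tracker` class below is a hypothetical illustration of that idea; it is not MLflow's API, which persists runs to a tracking server or local store.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    run_id: int
    params: dict[str, object] = field(default_factory=dict)
    metrics: dict[str, float] = field(default_factory=dict)


class Tracker:
    """Hypothetical in-memory experiment tracker (illustrative only)."""

    def __init__(self) -> None:
        self.runs: list[Run] = []

    def start_run(self) -> Run:
        run = Run(run_id=len(self.runs))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        """Return the best run among those that logged `metric` (raises ValueError if none did)."""
        candidates = [r for r in self.runs if metric in r.metrics]
        chooser = max if higher_is_better else min
        return chooser(candidates, key=lambda r: r.metrics[metric])


tracker = Tracker()
for depth, accuracy in [(3, 0.81), (5, 0.86), (8, 0.84)]:
    run = tracker.start_run()
    run.params["max_depth"] = depth
    run.metrics["accuracy"] = accuracy  # in practice, measured by evaluating the trained model

best = tracker.best_run("accuracy")
print(best.run_id, best.params, best.metrics)
```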

- Databricks:

Databricks is a unified analytics platform that combines data engineering, ML, and analytics capabilities in a collaborative environment. It provides a scalable infrastructure for building and deploying ML models, leveraging Apache Spark for distributed computing. Databricks offers integrated notebooks, libraries, and APIs for ML development, along with data visualisation and exploration tools. With support for model training, tuning, and deployment, Databricks enables data scientists and engineers to collaborate on ML projects and streamline the end-to-end workflow from data ingestion to model deployment.

- H2O.ai:

H2O.ai offers AI and machine learning solutions tailored for enterprise use cases, providing advanced algorithms and tools for model development and deployment. H2O.ai’s open-source platform, H2O, offers scalable machine learning algorithms and AutoML capabilities for rapid model prototyping and experimentation. Additionally, H2O Driverless AI provides an automated solution for feature engineering, model selection, and hyperparameter tuning, accelerating the model development process. With support for deploying models in production environments, H2O.ai empowers organisations to leverage AI for data-driven decision-making and business insights.

- Amazon SageMaker:

Amazon SageMaker is a fully managed ML service that simplifies the process of building, training, and deploying ML models on the AWS cloud platform. SageMaker provides a comprehensive set of tools and APIs for data preprocessing, model training, and inference, allowing users to iterate on ML experiments quickly. With built-in algorithms and support for custom Docker containers, SageMaker accommodates a wide range of ML use cases, from computer vision to natural language processing. By abstracting away the complexities of infrastructure management, SageMaker enables data scientists and developers to focus on building high-quality ML models and deploying them at scale.

These platforms and tools play a crucial role in enabling organisations to implement MLOps practices effectively, ensuring the seamless integration of machine learning into their business operations. They empower teams to develop, deploy, and manage ML models efficiently in real-world environments by providing scalable infrastructure, automation capabilities, and collaboration features.

Mastering MLOps: Key Guidelines

Mastering MLOps requires adhering to essential best practices for seamlessly integrating machine learning into business operations. Here’s a breakdown of critical guidelines:

Embrace Automation: Automate MLOps processes to reduce human error and boost efficiency. This includes automating tasks such as data preprocessing, model training, and deployment, which streamlines workflows and speeds up development cycles.
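As a minimal sketch of this kind of automation, the hypothetical `run_pipeline` function below chains preprocessing, training, and evaluation so a single call replaces several manual steps and leaves a log of each stage. The toy data and the one-variable least-squares model are assumptions for illustration, and evaluating on the training data is a simplification; a real pipeline would score the model on held-out data.

```python
from typing import Optional


def preprocess(rows: list[tuple[Optional[float], Optional[float]]]) -> list[tuple[float, float]]:
    """Drop rows with missing values (a stand-in for real data cleaning)."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def train(data: list[tuple[float, float]]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
    var = sum((x - mean_x) ** 2 for x, _ in data)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def evaluate(model: tuple[float, float], data: list[tuple[float, float]]) -> float:
    """Mean squared error of the model on the given data."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in data) / len(data)


def run_pipeline(raw_rows):
    """Run preprocess -> train -> evaluate with no manual steps, logging each stage."""
    log: list[str] = []
    data = preprocess(raw_rows)
    log.append(f"preprocess: kept {len(data)} of {len(raw_rows)} rows")
    model = train(data)
    log.append(f"train: slope={model[0]:.2f}, intercept={model[1]:.2f}")
    mse = evaluate(model, data)
    log.append(f"evaluate: mse={mse:.4f}")
    return model, mse, log


raw = [(1.0, 3.0), (2.0, 5.0), (None, 6.0), (3.0, 7.0), (4.0, 9.0)]
model, mse, log = run_pipeline(raw)
print("\n".join(log))
```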

Track Experiments and Versions: Implement robust experiment tracking and version control mechanisms to monitor model development, iterations, and performance metrics. This ensures reproducibility, transparency, and collaboration, aiding decision-making throughout the ML lifecycle.
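One lightweight way to make model versions reproducible is to derive a version ID by hashing the exact training configuration and data. The hypothetical `version_id` helper below sketches that idea with the standard library; dedicated tools such as MLflow's model registry provide far richer versioning.

```python
import hashlib
import json


def version_id(params: dict, data_rows: list) -> str:
    """Derive a short, deterministic version ID from training parameters and data.

    Identical inputs always yield the same ID, so a stored model can be traced
    back to the configuration and data that produced it.
    """
    # sort_keys makes the ID independent of dictionary key order.
    payload = json.dumps({"params": params, "data": data_rows}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


params = {"learning_rate": 0.01, "max_depth": 5}
data = [[1.0, 3.0], [2.0, 5.0]]

v1 = version_id(params, data)
v2 = version_id(dict(reversed(list(params.items()))), data)  # same params, different key order
v3 = version_id({**params, "max_depth": 6}, data)            # one parameter changed
print(v1, v2, v3)
```

Any change to a parameter or to the data produces a different ID, while merely reordering keys does not.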

Leverage CI/CD Pipelines: Deploy ML models using continuous integration and continuous deployment (CI/CD) pipelines for rapid and reliable updates to production environments. These pipelines automate testing, validation, and deployment, seamlessly integrating ML with existing development practices.
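A common validation step in such pipelines is a promotion gate: the candidate model replaces the production model only if it does not regress on key metrics. The hypothetical `should_promote` function below sketches one such rule; the tolerance value and the assumption that every metric is "higher is better" are illustrative choices, not a standard.

```python
def should_promote(
    candidate: dict[str, float],
    production: dict[str, float],
    max_regression: float = 0.01,
) -> tuple[bool, list[str]]:
    """Decide whether a candidate model may replace the production model.

    Assumes every metric is "higher is better". The candidate fails if it is
    missing a metric or falls more than `max_regression` below production on it.
    """
    reasons: list[str] = []
    for name, prod_value in production.items():
        if name not in candidate:
            reasons.append(f"{name} missing from candidate metrics")
        elif prod_value - candidate[name] > max_regression:
            reasons.append(f"{name} dropped from {prod_value:.3f} to {candidate[name]:.3f}")
    return not reasons, reasons


production = {"accuracy": 0.90, "recall": 0.80}
ok_a, why_a = should_promote({"accuracy": 0.92, "recall": 0.795}, production)  # small dip, within tolerance
ok_b, why_b = should_promote({"accuracy": 0.93, "recall": 0.75}, production)   # recall regresses
print(ok_a, why_a)
print(ok_b, why_b)
```

In a CI job, a `False` result would typically be turned into a non-zero exit code so the pipeline stops before deployment.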

Design for Scalability and Performance: Develop and deploy ML models with scalability and performance in mind to meet production demands. Optimise models for speed, efficiency, and resource utilisation so they can handle large data volumes and serve multiple users concurrently.
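One widely used technique for serving many users efficiently is micro-batching: grouping incoming requests so the model is invoked once per batch rather than once per request. The sketch below assumes a hypothetical model exposing a `predict_batch` method; real serving systems also add queueing and timeouts. Python 3.12 provides `itertools.batched`, but a small helper is defined here so the example also runs on older versions.

```python
from typing import Iterator, Sequence


def batched(items: Sequence[float], batch_size: int) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of at most `batch_size` items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


class CountingModel:
    """Hypothetical model that counts calls to show how batching reduces them."""

    def __init__(self) -> None:
        self.calls = 0

    def predict_batch(self, xs: Sequence[float]) -> list[float]:
        self.calls += 1
        return [2.0 * x + 1.0 for x in xs]  # toy linear model


def serve(requests: Sequence[float], model: CountingModel, batch_size: int = 32) -> list[float]:
    """Answer all requests with one model call per batch instead of one per request."""
    predictions: list[float] = []
    for batch in batched(requests, batch_size):
        predictions.extend(model.predict_batch(batch))
    return predictions


model = CountingModel()
requests = [float(i) for i in range(100)]
preds = serve(requests, model)
print(len(preds), model.calls)
```

With 100 requests and a batch size of 32, the model is called four times instead of 100, which matters when each call carries fixed overhead such as a network hop or a GPU kernel launch.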

Following these best practices empowers organisations to harness MLOps effectively, driving innovation, accelerating time to market, and achieving success in the machine learning era.

Conclusion

MLOps, short for Machine Learning Operations, is the cornerstone for deploying, managing, and scaling machine learning models in production environments. By integrating DevOps principles with machine learning practices, MLOps streamlines the entire ML lifecycle, from development and training to deployment and monitoring.

MLOps supports efficient, reliable, and scalable deployment of ML models while enabling continuous integration, delivery, and monitoring to sustain model performance. It fosters collaboration among data scientists, ML engineers, and IT operations teams by automating and optimising the end-to-end process of deploying and managing ML models in real-world applications, and a comprehensive MLOps Course can help practitioners build these skills. With MLOps, organisations can harness the full potential of machine learning, driving innovation and achieving business success in today’s data-driven landscape.